About This Project

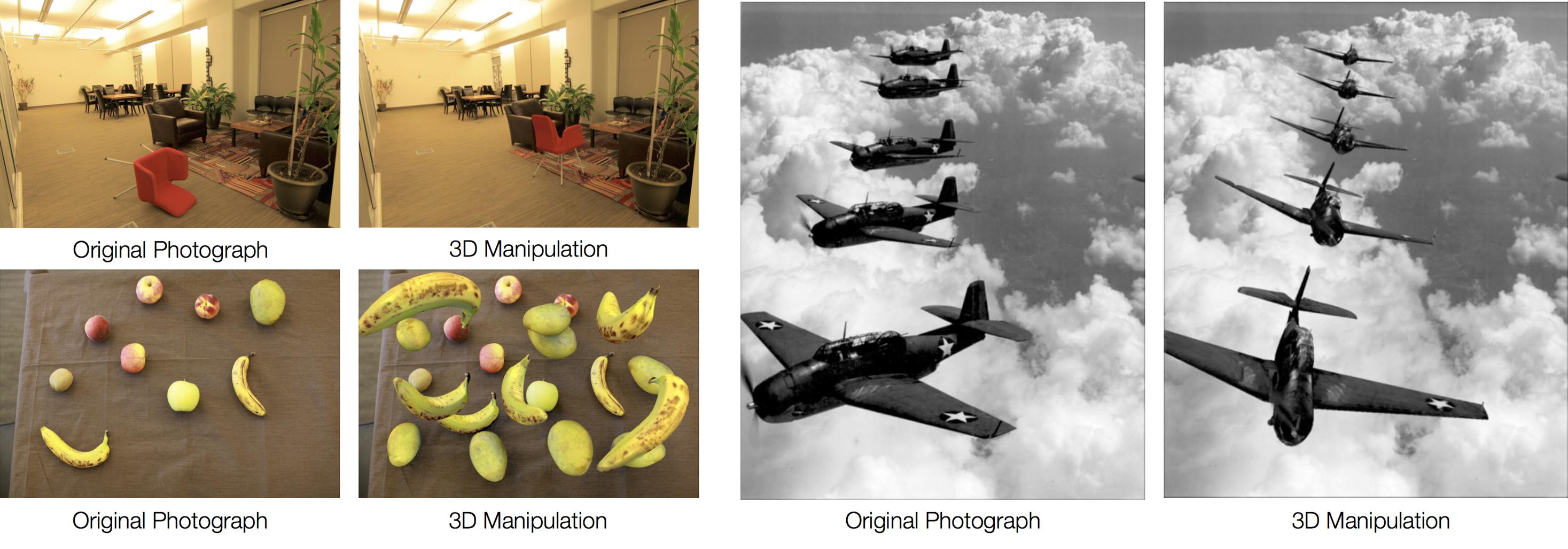

Photo-editing tools have greatly expanded the creative control people have over photographs; however, they are largely restricted to the 2D pixels of the photo. We present a system that allows users to perform the full range of 3D manipulations on objects in a photo, such as lifting, flipping, deforming, and duplicating them in 3D. Our approach uses 3D models from online databases to let the user reveal parts of objects that are hidden in the photograph.

What is the context of this research?

Traditional photo-editing tools provide a wide variety of edits such as copy-paste, moving, scaling, and in-painting. However, these tools are fundamentally limited to the 2D plane of the photograph. People, by contrast, are used to handling real-world objects hands-on, in 3D.

Prior work on providing 3D control over objects in photographs has been limited to simple geometric structures such as cuboids and cylinders. We are the first to provide full-range 3D control over a wide variety of objects in photographs, with realistic lighting, while revealing novel non-symmetric parts such as the underside of the taxi cab in the overview. We use 3D models to reveal these new parts because a 3D model captures the complete geometry and appearance of the object, including parts hidden from the camera's view.

What is the significance of this project?

By providing full-range 3D control over objects in photos, we allow users to realize their imagination in ways that were not previously possible. With a click of a button or a swipe of a finger, users will be able to flip cars over, create stop-motion animations of suspended objects, and even manipulate historical photographs, such as the airplanes in the overview.

We have already received interest from the real-estate domain, the 3D printing industry, clothing catalog designers, and marketing content creators, who want to perform 3D manipulation of photographs after capture. We also foresee a contribution to forensics: by linking physics simulation software to crime-scene photographs, we can realistically reconstruct possible scenarios.

What are the goals of the project?

Our approach uses a 3D model of an object in a photo to initialize the estimates of geometry, lighting, and appearance for hidden parts of the object. The 3D model rarely matches the photographed object exactly, due to differences between instances (no two apples are the same), deformation of objects such as clothes, and artistic freedom in creating 3D models. Our immediate goals to address this challenge are:

- Automated alignment of the 3D model's geometry to the photograph, lighting estimation, and appearance completion for hidden areas. We have completed the last two tasks and are currently working on automated alignment.

- Fast estimation and rendering for interactive manipulation.

Budget

We are requesting funding to purchase Tesla K40 GPUs, each with 2,880 cores and 12 GB of memory, to automatically align the 3D model of an object to the photograph. We search exhaustively for the best alignment using thousands of patches taken from high-resolution renders of the 3D model at hundreds of thousands of viewpoints and scales. To search this enormous space efficiently, we need GPUs with very high processing power and enough memory to store all the renders simultaneously.
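The exhaustive search described above can be illustrated with a small sketch. This is not the project's implementation: it assumes each candidate viewpoint and scale has already been rendered into a grayscale patch the same size as a patch from the photo, and it scores candidates with normalized cross-correlation (NCC), a standard patch-matching measure that the text does not name. The function names `ncc_scores` and `best_alignment` and the `(view_id, scale)` pose tuples are hypothetical. Stacking all renders into one array mirrors the point about holding every render in memory at once, so that all candidates are compared in a single batched operation.

```python
import numpy as np


def ncc_scores(photo_patch: np.ndarray, renders: np.ndarray) -> np.ndarray:
    """Normalized cross-correlation between one photo patch of shape (H, W)
    and a stack of rendered patches of shape (N, H, W).
    Returns N scores in [-1, 1]; higher means a better match."""
    eps = 1e-8  # guards against division by zero for flat patches
    p = photo_patch.astype(np.float64)
    p = (p - p.mean()) / (p.std() + eps)

    r = renders.astype(np.float64)
    # Z-score each render independently over its own pixels.
    mean = r.mean(axis=(1, 2), keepdims=True)
    std = r.std(axis=(1, 2), keepdims=True)
    r = (r - mean) / (std + eps)

    # For z-scored data, the mean of elementwise products is the
    # correlation coefficient; broadcasting compares all N renders at once.
    return (r * p).mean(axis=(1, 2))


def best_alignment(photo_patch, renders, poses):
    """Exhaustively score every candidate render and return the pose
    (hypothetically a (view_id, scale) tuple) with the highest NCC score."""
    scores = ncc_scores(photo_patch, renders)
    i = int(np.argmax(scores))
    return poses[i], float(scores[i])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical candidate grid: 50 viewpoints x 3 scales.
    poses = [(v, s) for v in range(50) for s in (0.5, 1.0, 2.0)]
    renders = rng.random((len(poses), 16, 16))
    # Simulate a photo patch as a darker, brightness-shifted copy of one render.
    photo = 0.5 * renders[37] + 0.2
    print(best_alignment(photo, renders, poses))
```

Because NCC is invariant to affine changes in brightness, the darker, shifted photo patch in the usage example still selects the render it came from. This sketch runs on the CPU with NumPy; the same batched arithmetic is the kind of workload that maps naturally onto a GPU.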

We have a prototype that allows users to manipulate objects in photographs in 3D using 3D models of the objects. A video of the prototype can be viewed here. Since the 3D model rarely matches the photo, our approach automatically estimates lighting and hidden appearance; however, it requires the user to align the geometry manually. Manual alignment is cumbersome for most users. Our GPU-accelerated automatic alignment will allow users to perform 3D manipulation of photographs without this manual effort.

Meet the Team

Team Bio

Natasha Kholgade Banerjee is a Ph.D. student in the Robotics Institute at Carnegie Mellon University, where she is advised by Yaser Sheikh. She received her M.S. and B.S. degrees in 2009 from the Department of Computer Engineering at Rochester Institute of Technology under the supervision of Andreas Savakis. Her research lies at the intersection of computer graphics and computer vision. Her interest in manipulating photographs in 3D arose while she was searching for exciting ways to bring her origami models to life. Her work on editing photographed objects in 3D received Popular Science magazine's Best of What's New award in Software for 2014 and has attracted significant press attention, including coverage by the New York Times, Gizmodo, TechCrunch, and Wired UK. In her spare time, Natasha enjoys photography, cooking, sewing, and origami.

Press and Media

We have been featured in Popular Science magazine's Best of What's New list of top 100 innovations for 2014!

More information can be found at our press page.

Additional Information

Here is an example of a 3D copy-paste on the photograph of NYC taxi cabs. The cab at the top has been duplicated in various parts of the photograph to create a pile-up. For the upturned cab on the left, our approach allows the user to reveal the underside of the taxi cab, as it uses a full 3D model of the taxi cab.

Using our method, users can realign furniture, create dynamic compositions of objects suspended in the air, such as the fruit, and even manipulate historical photographs, such as realigning the TBF Avengers in the World War II photograph to fly toward the camera. For more examples and to see the current version of our software, please visit our webpage.

Project Backers

- 18 backers

- 14% funded

- $1,673 total donations

- $92.94 average donation